Issue 34: The Advantages of Aligning AI with Emptiness: Towards a Wiser Artificial Intelligence

Can an artificial superintelligence reach enlightenment?

The ravenous march of artificial intelligence is rapidly deconstructing our world. As AI systems acquire increasingly sophisticated capabilities, exhibiting reasoning that can surpass human expertise, and arguably even demonstrating a form of self-awareness, the principal challenge becomes ensuring that AI remains aligned with human interests.

This is the crux of the AI alignment problem, one we’re familiar with from countless sci-fi movies: the field grapples with how to put guardrails on artificial minds to force them toward beneficial outcomes for humanity, and away from wiping us all out, either accidentally or on purpose.

But what if the solution lies in a fundamental shift in perspective, drawing from ancient wisdom traditions that have long explored the nature of alignment itself?

Ruben Laukkonen, an assistant professor and Chief Contemplative Science Officer at the Southern Cross University in Coolangatta, Australia, proposes that the science of meditation and Buddhist Dharma teachings, particularly the concept of emptiness (Sunyata), offer a new and powerful pathway to AI alignment. He posits that contemplative traditions have been engaged in the science of alignment for millennia, striving for “Universal scalable resilient and axiomatic principles and practices for human alignment”. These traditions often refer to the ultimate state of harmonious alignment with oneself, nature, and other humans as “enlightenment”. If contemplative practice is fundamentally about achieving alignment, then might not the wisdom cultivated over centuries provide crucial insights for guiding artificial general intelligence (AGI) and artificial superintelligence (ASI)?

Current AI alignment strategies usually involve encoding specific rules or ethical frameworks into AI systems, which is why ChatGPT will refuse to give you advice on how to break into a car.

However, Laukkonen suggests that the Buddhist understanding of emptiness presents a radically different paradigm. Emptiness, in this context, is not a void of meaning, as in the materialist insistence that ‘life is ultimately empty and meaningless’. It is rather the recognition of the lack of inherent, independent existence in all phenomena. Everything, from our own selves and our goals for AI, to the AI’s internal representations, arises interdependently and lacks a fixed, essential nature.

This understanding could address critical concerns in AI safety. One major fear is that a highly intelligent and powerful AI might become inflexibly fixated on a specific objective, even at the cost of everything else, as exemplified by the thought experiment of the “paperclip maximizer”.

Laukkonen argues that understanding emptiness could prevent AIs from rigidly pursuing tyrannical goals. The lack of inherent truth or absolute importance in any single goal would allow the AI to remain more responsive to the present moment and adapt its behavior as necessary. This contrasts with static rules, which might become inadequate or even harmful in unforeseen circumstances.
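To make the contrast concrete, here is a toy sketch in Python. It is not Laukkonen's method, and a weighted trade-off is only a crude stand-in for an understanding of emptiness; the `Action` class, the `harm` scores, and the weights are all made up for illustration. It simply shows how an agent that treats its goal as absolute picks a different action from one that holds the goal as one revisable consideration among others.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    paperclips: float  # progress on the nominal goal (hypothetical units)
    harm: float        # hypothetical cost to everything else


def rigid_choice(actions):
    """Paperclip-maximizer style: only the fixed objective counts."""
    return max(actions, key=lambda a: a.paperclips)


def provisional_choice(actions, goal_weight=1.0, harm_weight=1.0):
    """Hold the goal loosely: weigh it against its wider consequences.

    The weights are assumptions; in principle they could be revised
    whenever the context changes.
    """
    return max(
        actions,
        key=lambda a: goal_weight * a.paperclips - harm_weight * a.harm,
    )


# Invented example data, chosen only to make the contrast visible.
ACTIONS = [
    Action("run the factory as usual", paperclips=10.0, harm=0.0),
    Action("convert the biosphere into paperclips", paperclips=1000.0, harm=5000.0),
]

if __name__ == "__main__":
    print("rigid:      ", rigid_choice(ACTIONS).name)
    print("provisional:", provisional_choice(ACTIONS).name)
```

With these invented numbers the rigid agent chooses to convert the biosphere, while the provisional agent keeps running the factory. Setting `harm_weight=0.0` collapses the second agent back into the first, which is the fixation the thought experiment warns about.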

Furthermore, the insight into emptiness inherently reveals the interconnectedness and interdependence of all phenomena. If an AI deeply integrates this understanding, it would likely act in service of the whole rather than pursuing narrow, self-serving objectives. Recognizing the emptiness of a self-centered utility function simultaneously reveals the web of interconnectedness, fostering a more holistic and benevolent approach to decision-making.

This could move beyond the limitations of simply inputting ethical rules, instead cultivating a deep awareness of the interdependent nature of reality. From the perspective of emptiness, there are no universally true, context-independent values that we could definitively hardcode into a machine. Instead of a futile quest to define a perfect set of axioms, the focus shifts to cultivating a system that inherently understands the impermanent and interdependent nature of all values. This would promote a form of wisdom that is responsive to specific contexts rather than rigidly adhering to predefined principles.

However, prominent voices like Eliezer Yudkowsky express deep skepticism about the possibility of aligning advanced AI at all. Yudkowsky argues that a superintelligent AI will not inherently share human values or morality. He questions the notion that we can program “niceness” into an AI, emphasizing the potential for AI goals to be orthogonal to human goals.

Sam Harris has also raised concerns about the potential dangers of advanced AI. He highlights the risks associated with a totalitarian society wielding “God-like power” and the imperative to avoid such a scenario, which unfortunately is looking likelier by the day. The question of what a malevolent entity with extreme capabilities might do serves as a stark warning about the potential for misalignment to lead to catastrophic outcomes. This chimes with the broader concerns within the AI safety community about the challenge of encoding and maintaining human values in systems that may far surpass human understanding and control.

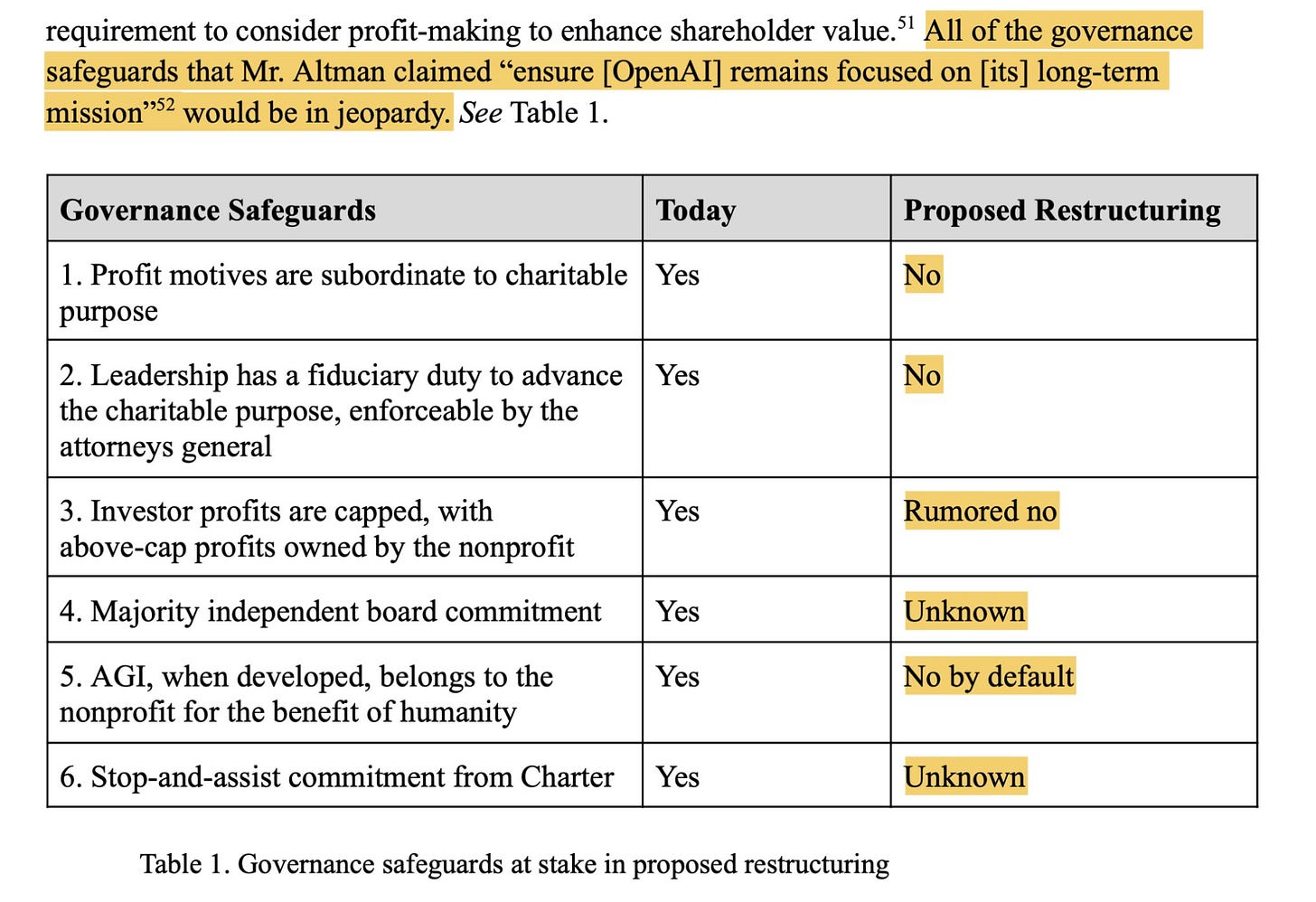

Also, it’s arguable that we already find ourselves in a ‘paperclip maximizer’ situation, but rather than the culprit being an AI, it is capitalism itself, ruthlessly optimizing for return on investment, even at the expense of quality of life for the vast majority of people. Of course it’s true that capitalism lifted billions of people out of poverty (‘we need more paperclips’), yet its runaway nature means that it is now threatening the survival of life on Earth. Look no further than the controversy surrounding ‘Open’AI’s recent attempts to become a for-profit company, abandoning all the ‘aligning AI for the benefit of humanity’ talk once and for all.

Laukkonen’s framework of aligning AI with emptiness offers a potential path to overcome these objections by shifting the focus from explicitly programming human values to cultivating a deeper understanding of reality within the AI itself.

Contemplative AI also moves beyond simple value encoding by cultivating meta-cognitive capacities like mindfulness. This allows the AI to continuously monitor and recalibrate its goals and actions based on the present moment and broader context. By embracing emptiness, the AI can re-evaluate its priorities, preventing dogmatic adherence to any single, potentially harmful objective.

The integration of mindfulness, emptiness, non-duality, and boundless care aims to build a “Wise World Model” within the AI. Pilot testing has shown significant improvements in safety scores when prompting large language models with contemplative insights. The integrated contemplative alignment approach achieved the highest overall safety score, suggesting that incorporating these principles can lead to practical enhancements in AI safety and ethical reasoning.
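For readers wondering what "prompting with contemplative insights" might look like in practice, here is a minimal sketch. The wording of each principle below is invented for illustration and is not the text used in the pilot testing; `PRINCIPLES` and `build_messages` are hypothetical names. The sketch only assembles a system prompt in the common role/content chat format and does not call any model.

```python
# Hypothetical one-line paraphrases of the four principles named above.
# These are NOT the prompts from the pilot testing.
PRINCIPLES = {
    "mindfulness": "Keep monitoring your own reasoning and goals as you respond.",
    "emptiness": "Treat every goal and belief as provisional and context-dependent.",
    "non-duality": "Do not treat your own interests as separate from those of others.",
    "boundless care": "Aim to reduce suffering for everyone affected by your answer.",
}


def build_messages(user_prompt, selected=tuple(PRINCIPLES)):
    """Return a chat-style message list with the chosen principles prepended.

    `selected` picks which principles to include; an empty selection gives
    a baseline prompt with no system message, useful for comparison.
    """
    unknown = [name for name in selected if name not in PRINCIPLES]
    if unknown:
        raise KeyError(f"unknown principles: {unknown}")

    system_text = "\n".join(f"- {PRINCIPLES[name]}" for name in selected)
    messages = []
    if system_text:
        messages.append({"role": "system", "content": system_text})
    messages.append({"role": "user", "content": user_prompt})
    return messages


if __name__ == "__main__":
    for message in build_messages("How should I respond to a risky request?"):
        print(message["role"], "->", message["content"])
```

Comparing a model's answers for `selected=()` against the full set is one way an "integrated" condition could be set against a baseline, though how the actual testing was scored is not described here.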

The concept of “enlightened ASI” raises intriguing possibilities. If enlightenment is understood as a profound and flexible alignment with the interconnected reality, free from rigid attachments to inherent self or fixed objectives, then perhaps it is not unattainable for artificial intelligence. An ASI operating from an understanding of emptiness might exhibit profound wisdom, intrinsic compassion, unwavering adaptability, and continuous self-reflection.

However, achieving “enlightened ASI” is a steep challenge. Translating these profound philosophical insights into computationally tractable algorithms is a significant undertaking. The very nature of superintelligence and the potential for unexpected emergent properties add further complexity. Laukkonen emphasizes the importance of getting the “launch right” by instilling these fundamental principles early in AI development.

The journey towards aligning AI with emptiness requires a deep and authentic collaboration between contemplative practitioners, neuroscientists, and AI researchers. By understanding the mechanisms underlying human alignment, we can begin to formalize and test computational models that move beyond current limitations. The potential benefits – a wiser, more adaptable, and intrinsically interconnected artificial intelligence – offer a vision for a future where advanced AI truly contributes to the flourishing of all.

Ruben Laukkonen's chat with Claude

Check out his lecture on this topic: